1. Description

Prerequisites: pip3 install beautifulsoup4 requests (argparse ships with the Python standard library and does not need to be installed)
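As a quick sanity check before running the script, the sketch below reports which third-party packages are still missing. The helper name `missing_packages` and the `REQUIRED` mapping are made up for this illustration and are not part of the original script.

```python
import importlib.util

# Third-party modules the script imports, mapped to their pip package names.
# (Hypothetical helper data for illustration only.)
REQUIRED = {"bs4": "beautifulsoup4", "requests": "requests"}


def missing_packages(required=REQUIRED):
    """Return the pip names of required modules that cannot be found."""
    return [
        pip_name
        for module, pip_name in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip3 install " + " ".join(missing))
    else:
        print("All dependencies are available.")
```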

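Run the script with Python and pass two arguments: the first is the video code (case-insensitive), and the second is the proxy address (MissAV is blocked by the firewall, so it has to be reached through a proxy).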
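To make the two arguments concrete, here is a minimal sketch of how they could be parsed and turned into the inputs the script needs. The helper names `build_parser` and `to_search_inputs`, the example code `ABC-123`, and the proxy address `http://127.0.0.1:7890` are assumptions chosen for illustration.

```python
import argparse


def build_parser():
    """Parser with the two positional arguments described above."""
    parser = argparse.ArgumentParser(description="MissAV Spider Script")
    parser.add_argument("av_code", help="The AV code to search for (case-insensitive).")
    parser.add_argument("proxy_url", help="The URL of the proxy server.")
    return parser


def to_search_inputs(argv):
    """Return the lowercased code and a proxies dict in the shape requests expects."""
    args = build_parser().parse_args(argv)
    proxies = {"http": args.proxy_url, "https": args.proxy_url}
    return args.av_code.lower(), proxies


# Hypothetical values; on the command line these would come from sys.argv.
code, proxies = to_search_inputs(["ABC-123", "http://127.0.0.1:7890"])
print(code)
print(proxies)
```

Because the code is lowercased before use, `ABC-123` and `abc-123` lead to the same search.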

2. Source Code

import argparse

import requests
from bs4 import BeautifulSoup

def get_video_url(av_code, proxy_url):
    """Search MissAV for av_code and return the matching detail-page links."""
    header = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36',
        'Content-Type': 'application/json'
    }
    # Configure the proxy
    proxies = {
        "http": proxy_url,
        "https": proxy_url
    }
    # Normalize the code to lowercase
    av_code = av_code.lower()
    url = f"https://missav.com/cn/search/{av_code}"
    response = requests.get(url, proxies=proxies, headers=header)
    html_content = response.text
    # Parse the HTML
    soup = BeautifulSoup(html_content, 'html.parser')
    # Set used to de-duplicate links
    unique_links = set()
    # Collect every <a> tag whose alt attribute contains av_code
    for a_tag in soup.find_all('a'):
        alt_text = a_tag.get('alt')
        href = a_tag.get('href')
        # Skip tags without an href so no None ends up in the result
        if alt_text and av_code in alt_text and href:
            unique_links.add(href)
    links = list(unique_links)
    return links

def get_magnet_links(link, proxy_url):
    """Fetch a detail page and print one list of magnet data per table row."""
    header = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36',
        'Content-Type': 'application/json'
    }
    # Configure the proxy
    proxies = {
        "http": proxy_url,
        "https": proxy_url
    }
    response = requests.get(link, proxies=proxies, headers=header)
    html_content = response.text
    # Create the BeautifulSoup object
    soup = BeautifulSoup(html_content, 'html.parser')
    # Find the table with the given class
    target_table = soup.find('table', class_='min-w-full')
    # Check whether the target table was found
    if target_table is not None:
        rows = target_table.find_all('tr')
        # Walk through every row
        for row in rows:
            # All <td> cells in this row
            cols = row.find_all('td')
            # Data collected for this row
            data = []
            # Walk through every column
            for col in cols:
                # Look for <a> tags with rel="nofollow"
                anchors = col.find_all('a', rel='nofollow')
                # If any were found, collect their href attributes
                # (the loop variable is named a_tag so it does not shadow the link parameter)
                for a_tag in anchors:
                    href = a_tag['href']
                    if "keepshare.org" not in href:
                        data.append(href)
                # Also collect the cell text; "下载" is the "Download" button label on the cn page
                text = col.get_text(strip=True)
                if text != "下载" and "keepshare.org" not in text:
                    data.append(text)
            # Print the row data
            print(data)
    else:
        print("No table containing magnet links was found")

def main(av_code, proxy_url):
    links = get_video_url(av_code, proxy_url)
    for link in links:
        get_magnet_links(link, proxy_url)

if __name__ == "__main__":

parser = argparse.ArgumentParser(description="MissAV Spider Script")

parser.add_argument("av_code", help="The AV code to search for.")

parser.add_argument("proxy_url", help="The URL of the proxy server.")

args = parser.parse_args()

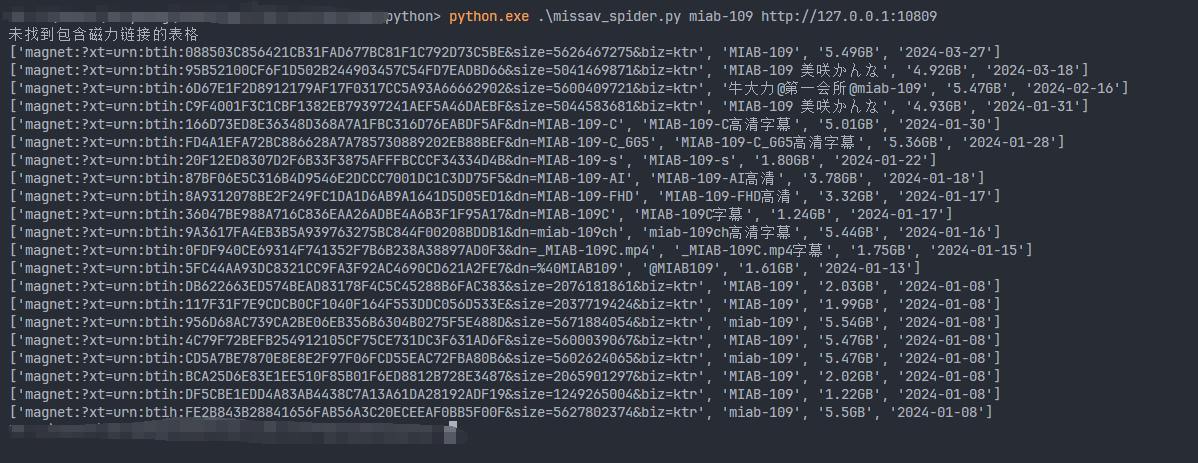

main(args.av_code, args.proxy_url)三、效果截图

4. Executable

Windows:

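3 comments

I am using a proxy and have added the request headers, but MissAV returns a 403 error. Other sites outside the firewall load fine through the same proxy.

This depends on the proxy's IP. MissAV sits behind Cloudflare (CF), and some IPs cannot get past Cloudflare's anti-bot checks. You can use my API directly instead.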
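Since a 403 here says more about the proxy IP than about the script, it can help to surface that case explicitly instead of parsing an error page. The sketch below is one possible way to do it, not something taken from the original script: `ProxyBlockedError` and `ensure_not_blocked` are hypothetical names. It only relies on the `status_code`, `text`, and `raise_for_status()` members that a `requests` response provides.

```python
class ProxyBlockedError(RuntimeError):
    """Raised when the site answers 403, which likely means the proxy IP was rejected."""


def ensure_not_blocked(response, proxy_url):
    """Return the page text, or raise a descriptive error for a 403 response.

    `response` is expected to behave like a requests.Response object.
    """
    if response.status_code == 403:
        raise ProxyBlockedError(
            f"Got HTTP 403 through proxy {proxy_url}; "
            "the proxy IP may be blocked by the site's anti-bot checks. "
            "Try a different proxy."
        )
    # Let any other HTTP error (404, 500, ...) raise as usual.
    response.raise_for_status()
    return response.text


# Usage with requests (not executed here):
#   response = requests.get(url, proxies=proxies, headers=header, timeout=15)
#   html_content = ensure_not_blocked(response, proxy_url)
```

With a check like this, a blocked proxy fails with a clear message rather than silently producing an empty result list.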
